Now, a US Research Project to Detect Fake Media Products

Recognizing the havoc caused by public dissemination of fake information, the US Defense Advanced Research Projects Agency (DARPA) has announced the Semantic Forensics (SemaFor) program to develop technologies that make the automatic detection, attribution, and characterization of falsified media assets a reality.

The goal of SemaFor is to develop a suite of semantic analysis algorithms that dramatically increase the burden on the creators of falsified media, making it exceedingly difficult for them to create compelling manipulated content that goes undetected.

“At the intersection of media manipulation and social media lies the threat of disinformation designed to negatively influence viewers and stir unrest,” said Dr. Matt Turek, a program manager in DARPA’s Information Innovation Office (I2O). “More nefarious manipulated media has also been used to target reputations, the political process, and other key aspects of society. Determining how media content was created or altered, what reaction it’s trying to achieve, and who was responsible for it could help quickly determine if it should be deemed a serious threat or something more benign.”

While statistical detection techniques have been successful in uncovering some media manipulations, purely statistical methods are insufficient to address the rapid advancement of media generation and manipulation technologies. Fortunately, automated manipulation capabilities used to create falsified content often rely on data-driven approaches that require thousands of training examples, or more, and are prone to making semantic errors. These semantic failures provide an opportunity for the defenders to gain an advantage.

To develop analysis algorithms for use across media modalities and at scale, the SemaFor program will create tools that, when used in conjunction, can help identify, deter, and understand falsified multi-modal media.

SemaFor will focus on three specific types of algorithms: semantic detection, attribution, and characterization.

Current surveillance systems are prone to “semantic errors.” An example, according to DARPA, is software not noticing mismatched earrings in a fake video or photo. Other indicators, which may be noticed by humans but missed by machines, include weird teeth, messy hair and unusual backgrounds. Semantic detection algorithms will determine if multi-modal media assets were generated or manipulated, while attribution algorithms will infer if the media originated from a purported organization or individual.

Determining how the media was created, and by whom, could help determine the broader motivations or rationale for its creation, as well as the skillsets at the falsifier’s disposal. Finally, characterization algorithms will reason about whether multi-modal media was generated or manipulated for malicious purposes.

“There is a difference between manipulations that alter media for entertainment or artistic purposes and those that alter media to generate a negative real-world impact. The algorithms developed on the SemaFor program will help analysts automatically identify and understand media that was falsified for malicious purposes,” said Turek.

SemaFor will also develop technologies to enable human analysts to more efficiently review and prioritize manipulated media assets. This includes methods to integrate the quantitative assessments provided by the detection, attribution, and characterization algorithms to automatically prioritize media for review and response. To help provide an understandable explanation to analysts, SemaFor will also develop technologies for automatically assembling and curating the evidence provided by the detection, attribution, and characterization algorithms.
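DARPA has not said how those assessments would be combined. Purely as an illustration, the idea of merging three scores into a review queue can be sketched in a few lines of Python. Everything here is an assumption made for the example: the `MediaAssessment` fields, the 0-to-1 score ranges, the `priority` formula and the sample assets are hypothetical and are not drawn from the SemaFor program.

```python
from dataclasses import dataclass


@dataclass
class MediaAssessment:
    """Hypothetical per-asset scores, each assumed to lie in [0, 1]."""
    asset_id: str
    detection: float         # how likely the asset was generated or manipulated
    attribution: float       # how likely it really came from the purported source
    characterization: float  # how likely the manipulation was malicious


def priority(a: MediaAssessment) -> float:
    """Illustrative formula only: likely falsified AND (malicious OR misattributed)."""
    misattributed = 1.0 - a.attribution
    return a.detection * max(a.characterization, misattributed)


def review_queue(assessments):
    """Return assets ordered from most to least urgent for a human analyst."""
    return sorted(assessments, key=priority, reverse=True)


# Made-up sample assets, for demonstration only.
assets = [
    MediaAssessment("satire-clip", detection=0.9, attribution=0.9, characterization=0.1),
    MediaAssessment("forged-statement", detection=0.8, attribution=0.2, characterization=0.7),
    MediaAssessment("authentic-photo", detection=0.05, attribution=0.95, characterization=0.05),
]

queue = review_queue(assets)
for item in queue:
    print(item.asset_id, round(priority(item), 3))
```

Under this toy formula, a heavily edited but harmless clip ranks below a forged statement from a doubtful source, which mirrors the distinction Turek draws between entertainment and malicious manipulation. A real system would presumably use far richer evidence than three numbers.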

The move comes a year before the 2020 US presidential election and follows Trump’s controversial 2016 win, which is often attributed to the spread of dubious content on platforms like Facebook, Twitter and Google. Hillary Clinton supporters claimed a flood of fake items may have helped sway the results in Trump’s favour. Following this, Mark Zuckerberg, CEO of Facebook, took measures to curb the dissemination of fake news by allowing users to flag content and enabling fact-checkers to label disputed stories.

Officials have been working to prevent outside hackers from flooding social channels with false information ahead of the upcoming election.

As recently as September 9, CNN carried a report that said “the US extracted from Russia one of its highest-level covert sources inside the Russian government” partly because “President Donald Trump and his administration repeatedly mishandled classified intelligence and could contribute to exposing the covert source as a spy.” Obama, meanwhile, had authorized the declassification and public release of a report by the Director of National Intelligence (DNI) prior to Trump’s inauguration, alluding to this source’s placement in the Russian government.

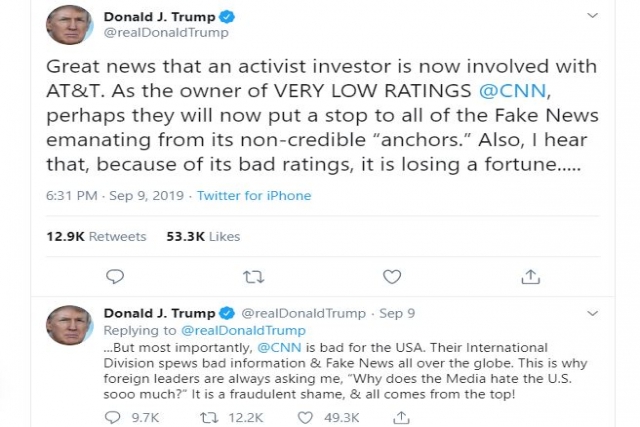

Responding to the news, Trump tweeted “CNN is bad for the USA. Their International Division spews bad information & Fake News all over the globe. This is why foreign leaders are always asking me, ‘Why does the Media hate the US sooo much?’ It is a fraudulent shame, & all comes from the top!”